Content management tools such as the content crawler have become far more important. Enterprise search, in which the search engine crawls URLs and sites so that users can search them for specific keywords, is an essential part of how organizations work with SharePoint 2013.

Now a virtually indispensable component of SharePoint 2013, the content crawler draws on content sources such as HTTP sites, documents, file sources and user profiles. If you are looking to get the best out of the content crawler in your organization, here’s how you can evolve your current enterprise search.

Achieving the ideal content crawler

A content crawler architecture should be designed to keep the search index fresh, so that users find recently added or changed content. An advanced content architecture can also run multiple crawl sessions at once.

With a properly configured content source, continuous crawling can improve crawling capabilities. Enabling it on the content source is a simple step toward a better content architecture.

Yet developing a foolproof content crawling architecture is vital. It is important not only to bring in technology experts to design the structure, but also to adopt their technologies with the help of knowledge experts.

Technology experts such as HCL Tech have worked continually on developing content architectures of this kind for companies, which have improved their enterprise search as a result.

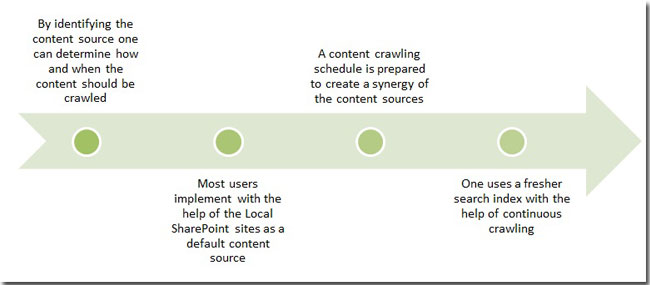

Here’s how one can benefit from continuous crawling in SharePoint 2013:

- SharePoint ties content sources closely to their content crawling schedules.

- SharePoint Server 2013 offers continuous crawling as an option. Ideally, configure it at the outset, as it helps build a fresher search index.

- With the new design architecture of SharePoint 2013, keeping content fresh takes little effort. The site administrator and the owner can maintain the freshness of the content by running continuous crawls in parallel.

- Controlling the number of crawl sessions need no longer be a worry. A crawler impact rule lets one limit how many simultaneous sessions are run.
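
The idea behind limiting simultaneous crawl sessions can be illustrated with a small sketch. This is not SharePoint code and does not use any SharePoint API. It is a minimal Python simulation, assuming a hypothetical `CrawlLimiter` class, a hypothetical `crawl_all` helper and made-up `intranet.example` URLs, that shows how a cap on concurrent requests keeps a crawler from overloading a site.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class CrawlLimiter:
    """Hypothetical helper: caps how many simulated crawl requests run at once."""

    def __init__(self, max_simultaneous):
        self._slots = threading.Semaphore(max_simultaneous)
        self._lock = threading.Lock()
        self._active = 0
        self.peak = 0  # highest number of requests seen in flight

    def fetch(self, url):
        # Wait for a free slot before "requesting" the page.
        with self._slots:
            with self._lock:
                self._active += 1
                self.peak = max(self.peak, self._active)
            try:
                time.sleep(0.01)  # stand-in for a real request
                return f"crawled {url}"
            finally:
                with self._lock:
                    self._active -= 1


def crawl_all(urls, max_simultaneous, workers=8):
    """Crawl every URL with a thread pool, never exceeding the cap."""
    limiter = CrawlLimiter(max_simultaneous)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(limiter.fetch, urls))
    return results, limiter.peak


if __name__ == "__main__":
    pages = [f"http://intranet.example/page{i}" for i in range(12)]
    results, peak = crawl_all(pages, max_simultaneous=2)
    print(len(results), "pages crawled, peak concurrency:", peak)
```

Even though the pool has eight workers, the semaphore ensures that no more than two requests are in flight at a time, which is the same trade-off a crawler impact rule makes between crawl speed and load on the crawled site.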

Optimizing enterprise search is not much trouble when one can engage the best technology consultants to build a robust content crawling architecture, such as those built with SharePoint 2013.

To learn more about the topic, you can read the whitepaper written by experts at HCL Technologies.